Smooth Reinforcement Learning Based Trajectory Tracking for Articulated Vehicles

Abstract

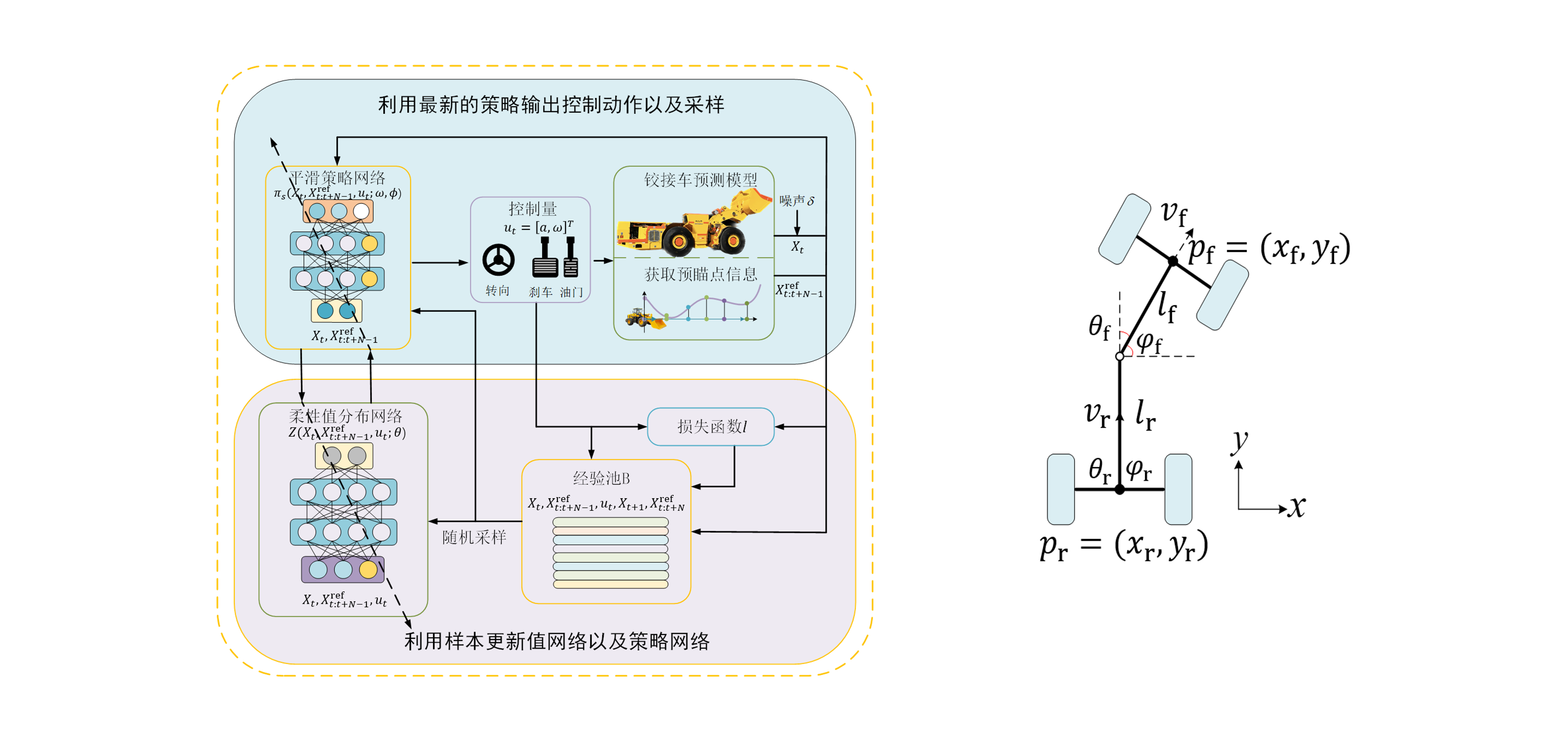

This study addresses action fluctuation in the trajectory tracking control of articulated vehicles and proposes a smooth tracking control method based on reinforcement learning (RL). To improve control accuracy, we feed trajectory preview information into both the policy and value networks, establishing a predictive policy iteration framework. To ensure control smoothness, we employ LipsNet to constrain the Lipschitz constant of the policy. Combining these with distributional RL theory, we formulate a trajectory tracking control algorithm for articulated vehicles that jointly optimizes control precision and action smoothness. Simulation results show that the proposed smooth RL tracking control method achieves high tracking accuracy and online computational efficiency while maintaining control smoothness across diverse noise levels. Compared with the conventional distributional soft actor-critic (DSAC), the smooth DSAC reduces action fluctuation by a factor of about 5.82.