Biography

I am a PhD student in the Mobility Transformation Lab at the University of Michigan. Before that, I received an MSc from Tsinghua University, where I worked in the Intelligent Driving Lab. My research covers neural networks, reinforcement learning, autonomous driving, and quantum computing. I am dedicated to building safer, more intelligent AI for automated vehicles, while also developing a next-generation paradigm for neural network training.

- Reinforcement Learning

- Autonomous Driving

- Quantum Computing

University of Michigan

Ph.D. in Civil Engineering & Scientific Computing

Tsinghua University

M.Sc. in Mechanical Engineering

Recent News


Experiences

Featured Publications

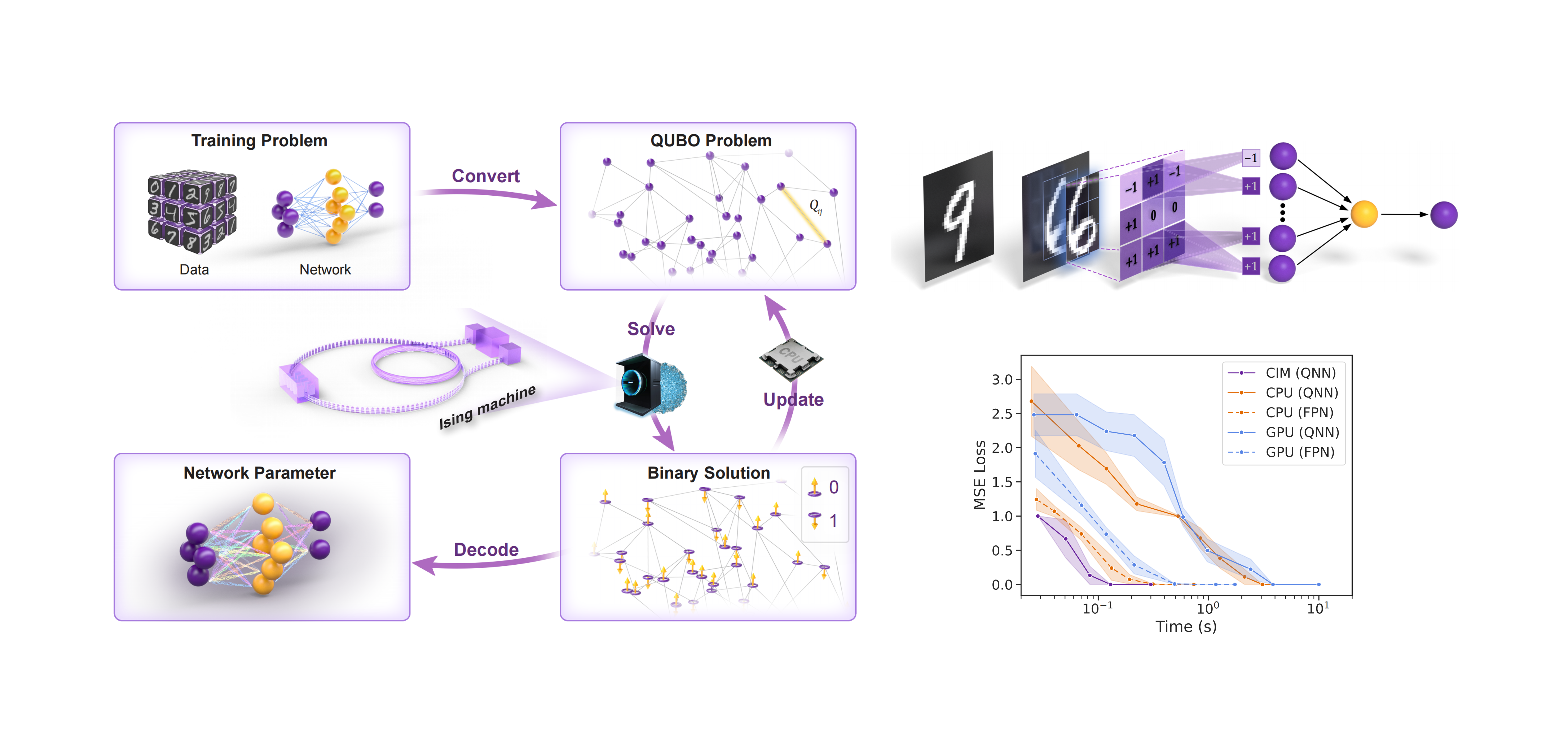

We proposed the Ising learning algorithm, the first technique for training multilayer feedforward neural networks on Ising machines (quantum computers). It reduces training time by 90% compared with training on CPUs/GPUs.

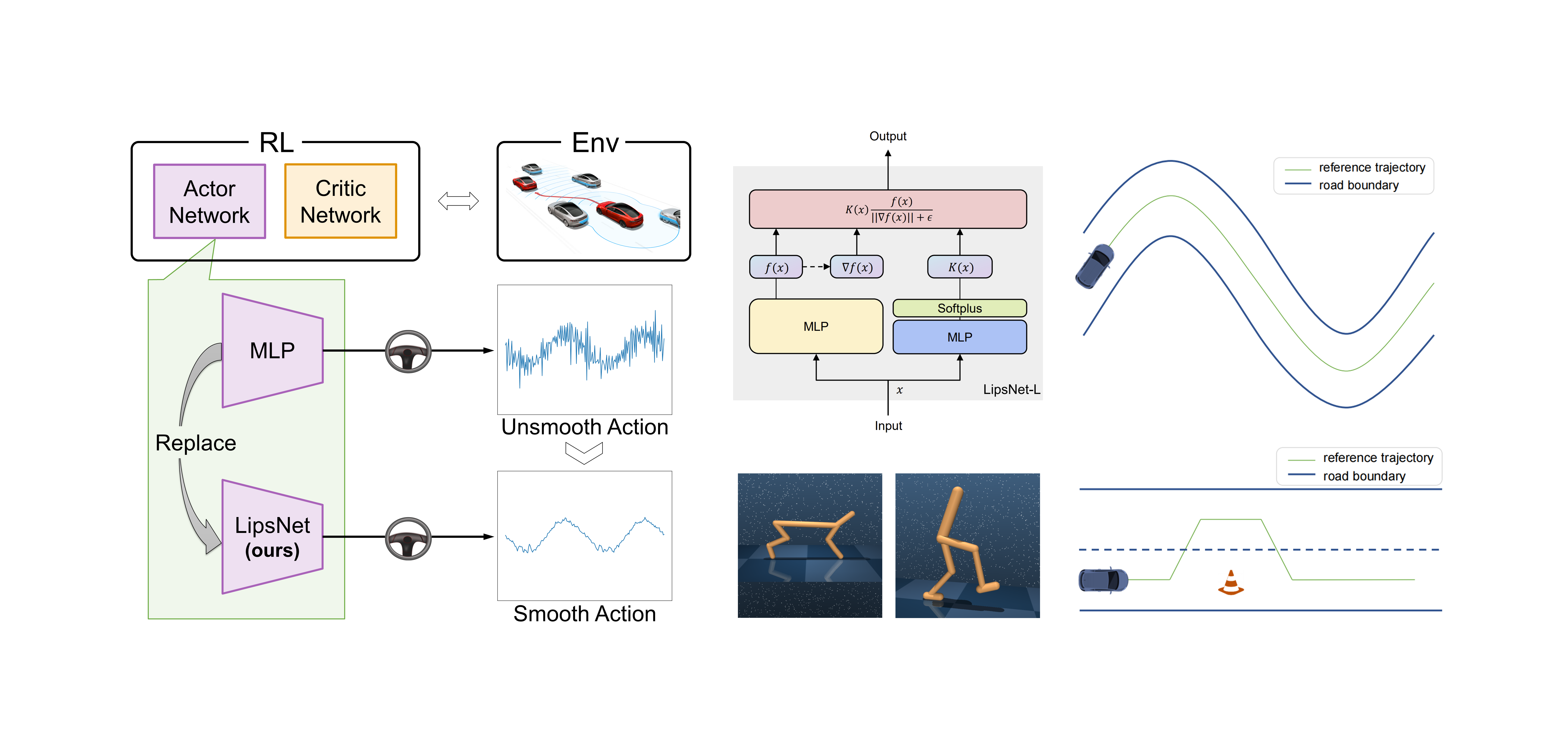

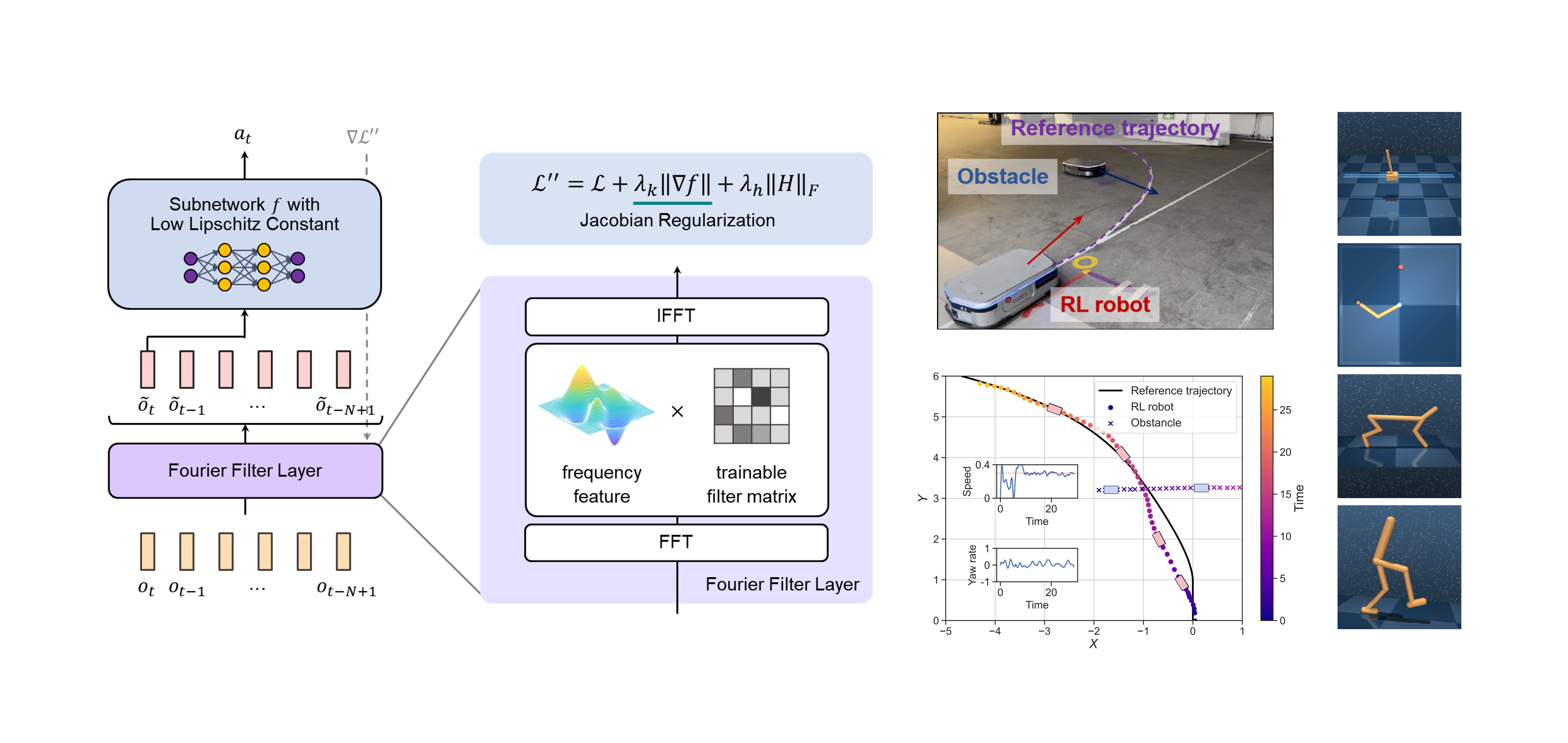

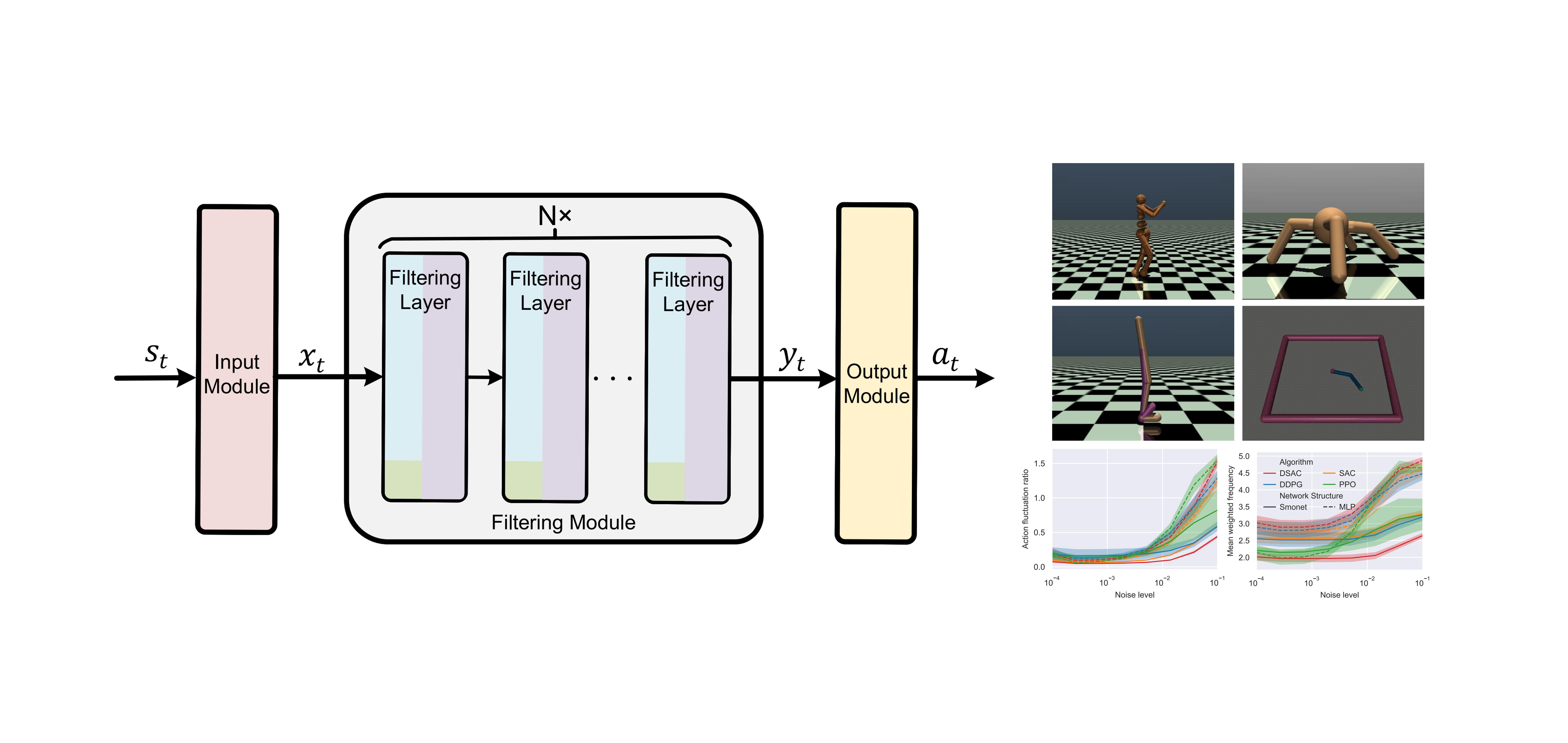

We unified filtering and control in a single RL policy network, achieving state-of-the-art noise robustness and action smoothness in real-world control tasks.

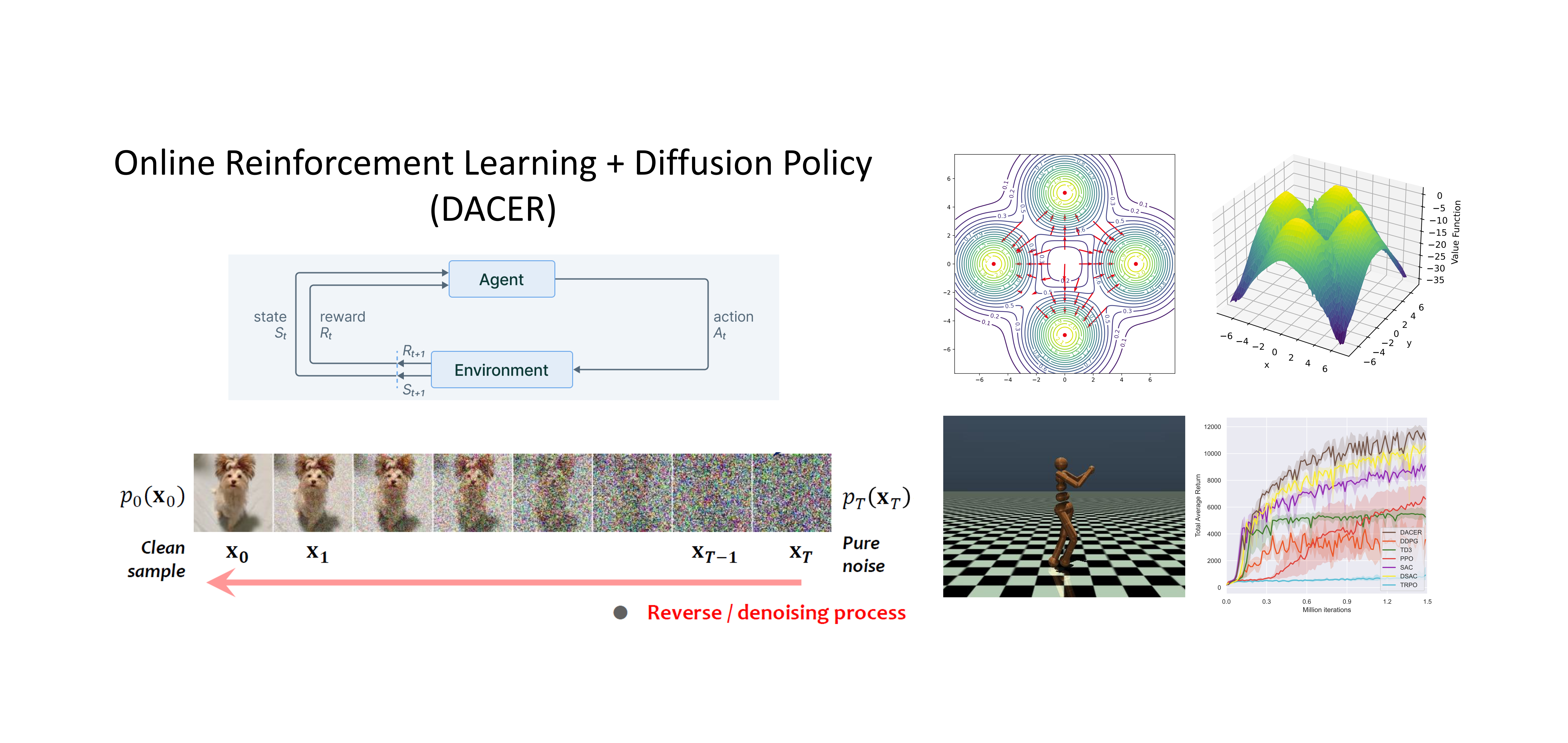

We proposed DACER, an online reinforcement learning algorithm that uses a diffusion model as the actor network to increase the representational capacity of the policy.

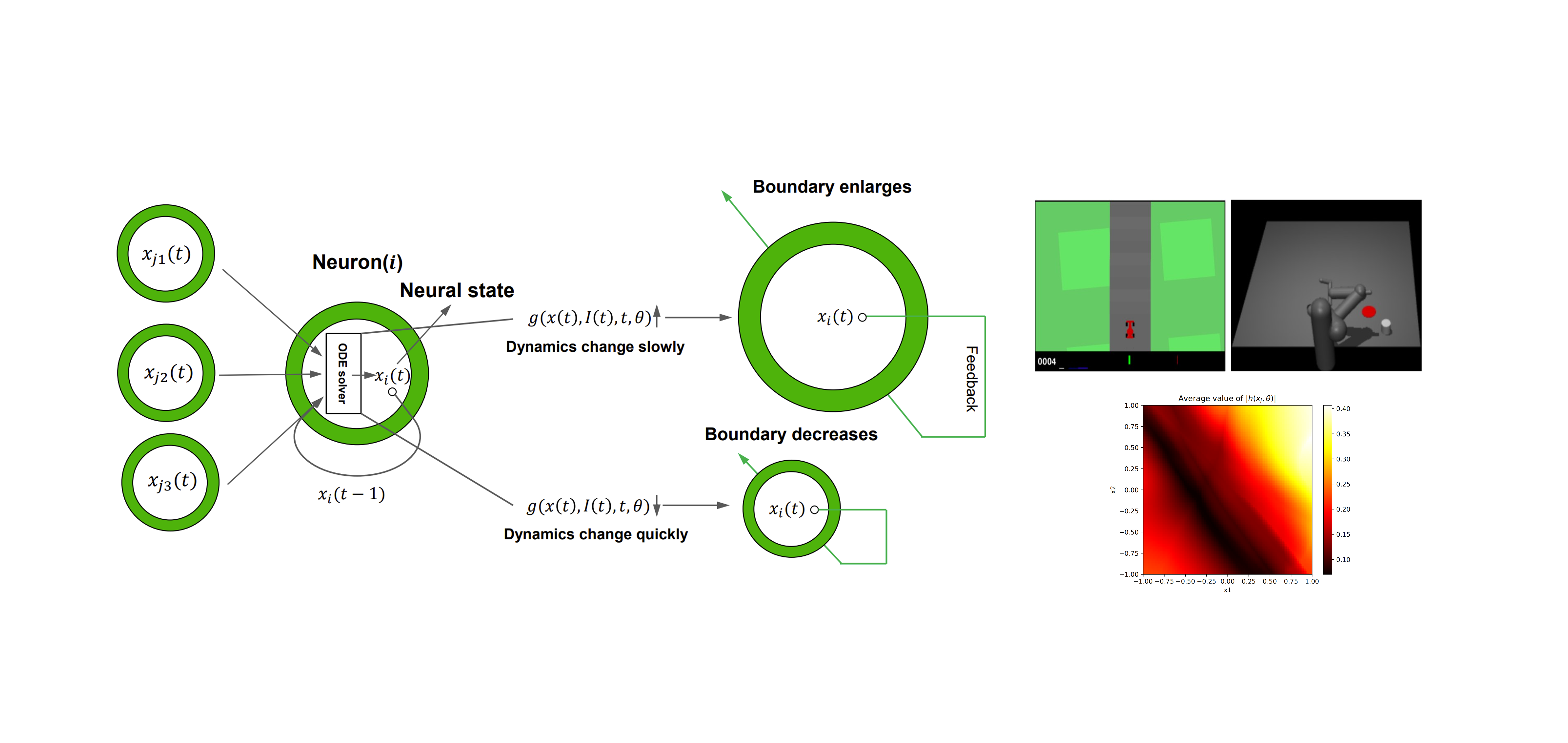

We proposed SmODE, a variant of the neural ODE, to smooth control actions in RL. It incorporates a mapping function that estimates how quickly the system dynamics change.

We proposed Smonet, an RL policy network with low-pass filtering capability, which alleviates action non-smoothness by learning low-frequency representations in its hidden layers.

Publications

Competition Awards

Honors & Scholarships

Contact

- xjsong99@gmail.com

- University of Michigan, Ann Arbor, MI 48109