Abstract

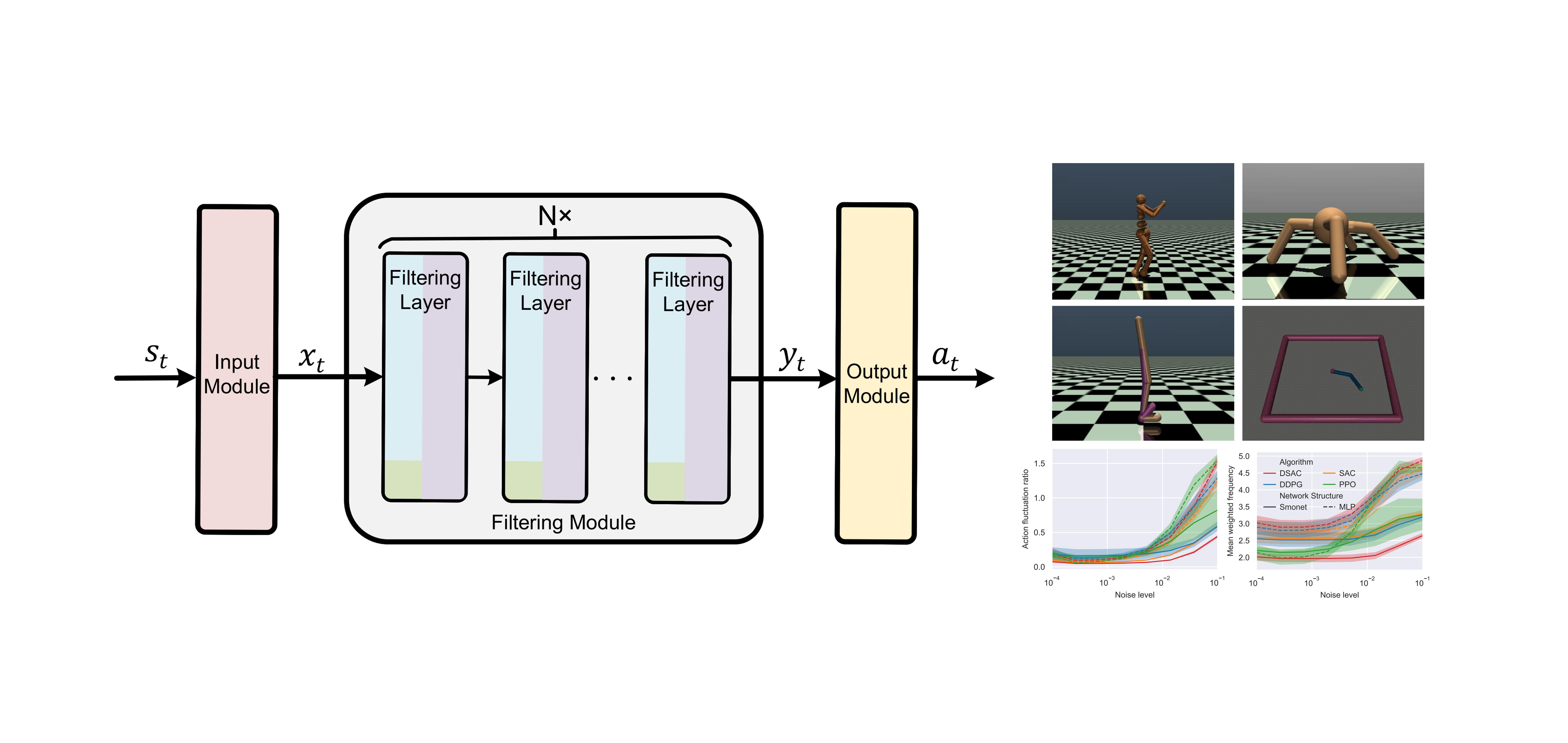

Reinforcement learning (RL) has demonstrated considerable potential in addressing intricate control and decision problems such as vehicle tracking control and obstacle avoidance. Nonetheless, control policies acquired through RL often lack smoothness even under minor noise or disturbances, which may induce oscillations, overheating, and even damage to the system in real-world applications. Existing methods address this issue in the temporal or spatial domain and are often tightly coupled with specific tasks. This paper studies the smoothness of control policies from a frequency-domain perspective. We propose a class of neural networks with low-pass filtering ability, named Smonet, to alleviate the non-smoothness issue by learning a low-frequency representation within hidden layers. Smonet features serial filtering layers that low-pass filter the input signal. Each filtering layer contains multiple inertia cells, one adaptive cell, and one activation layer. To strengthen the filtering ability of Smonet, we further propose a Smonet-based RL training method that adds an extra regularization term on the filtering factors to the standard RL loss. Finally, we assess the efficacy of Smonet on diverse simulated robot control tasks and a real-world mobile robot obstacle avoidance experiment, comparing its performance with two commonly used networks, the multi-layer perceptron and the gated recurrent unit. Results indicate that Smonet consistently enhances policy smoothness under various observation noises without compromising control performance. Notably, it achieves up to a 72.7% reduction in the action fluctuation ratio compared to traditional network structures.