A Smooth Reinforcement Learning Method for Trajectory Tracking and Collision Avoidance of Wheeled Vehicle

Abstract

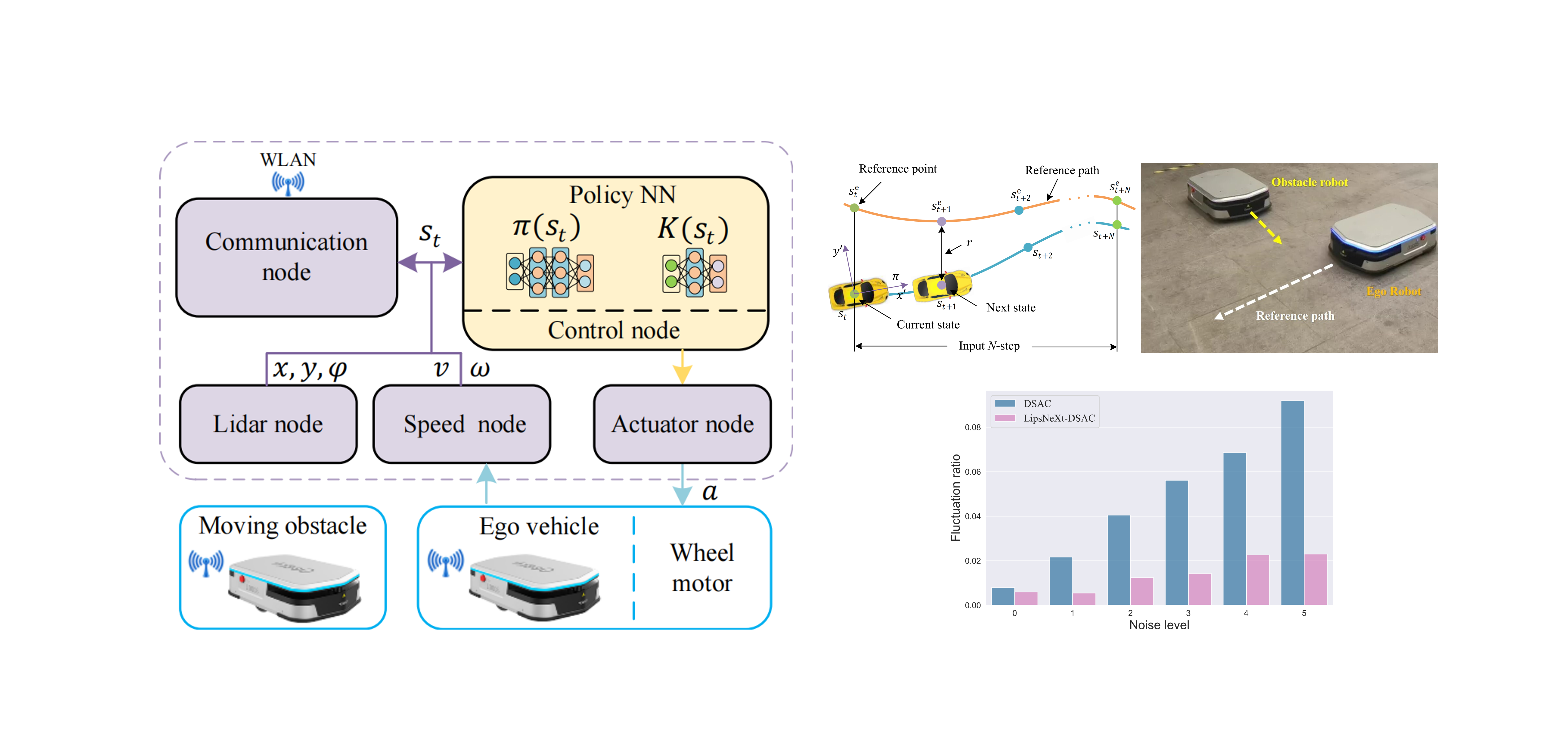

Reinforcement learning (RL) plays a pivotal role in solving complex tasks such as vehicle trajectory tracking and collision avoidance. However, RL-derived control policies often lack smoothness: minor state variations can trigger abrupt changes in control actions, which accelerates component degradation and can cause system failures in real-world scenarios. To mitigate this, we propose a novel neural network architecture named LipsNeXt, designed to smooth each dimension of the action separately. LipsNeXt uses a trainable parameterized network to dynamically regulate an individual Lipschitz constant for each component of the action vector, thereby improving control smoothness. Building on this, we combine LipsNeXt with distributional soft actor-critic (DSAC) to create a new RL algorithm, LipsNeXt-DSAC. This algorithm adds an L2 regularization term on the Lipschitz constants of the action components to the policy optimization objective, seeking a balance between control performance and smoothness. Experiments on real wheeled vehicles show that LipsNeXt-DSAC not only improves action smoothness but also significantly enhances position tracking precision, surpassing conventional smooth network architectures such as LipsNet.
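To make the idea of per-component Lipschitz regulation concrete, the following is a minimal illustrative sketch, not the LipsNeXt implementation. It assumes a linear action head (so the Jacobian is simply the weight matrix), a softplus-activated linear map as the trainable Lipschitz network, and a Jacobian-row normalization in the spirit of Lipschitz-constrained networks. The class `PerDimLipschitzPolicy`, the function `smoothness_penalty`, and the weight `lam` are hypothetical names introduced only for this example.

```python
import numpy as np

def softplus(z):
    # Numerically stable softplus; keeps the Lipschitz values positive.
    return np.logaddexp(0.0, z)

class PerDimLipschitzPolicy:
    """Toy policy with one state-dependent Lipschitz value per action component.

    Assumption: the action head is linear, so its Jacobian equals W exactly.
    """

    def __init__(self, state_dim, action_dim, seed=0, eps=1e-4):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(size=(action_dim, state_dim))        # action head
        self.b = np.zeros(action_dim)
        self.V = 0.1 * rng.normal(size=(action_dim, state_dim))  # Lipschitz network
        self.c = np.zeros(action_dim)
        self.eps = eps

    def lipschitz(self, s):
        # One positive value K_i(s) per action component.
        return softplus(self.V @ s + self.c)

    def act(self, s):
        raw = self.W @ s + self.b
        # Norm of each Jacobian row, i.e. the sensitivity of each raw output.
        jac_row_norms = np.linalg.norm(self.W, axis=1)
        # Normalize each component, then rescale it by its own K_i(s).
        return self.lipschitz(s) * raw / (jac_row_norms + self.eps)

def smoothness_penalty(policy, states, lam=1e-3):
    # L2 penalty on the per-component Lipschitz values, averaged over a batch.
    # In an actor-critic setting this term would be added to the policy loss.
    K = np.stack([policy.lipschitz(s) for s in states])
    return lam * np.mean(np.sum(K ** 2, axis=1))

policy = PerDimLipschitzPolicy(state_dim=4, action_dim=2)
state = np.ones(4)
action = policy.act(state)
penalty = smoothness_penalty(policy, [state, -state])
```

In this sketch, a larger `lam` pushes every `K_i` toward zero and therefore toward smoother but less responsive actions, which is the trade-off between control performance and smoothness that the regularization term is meant to balance.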