Abstract

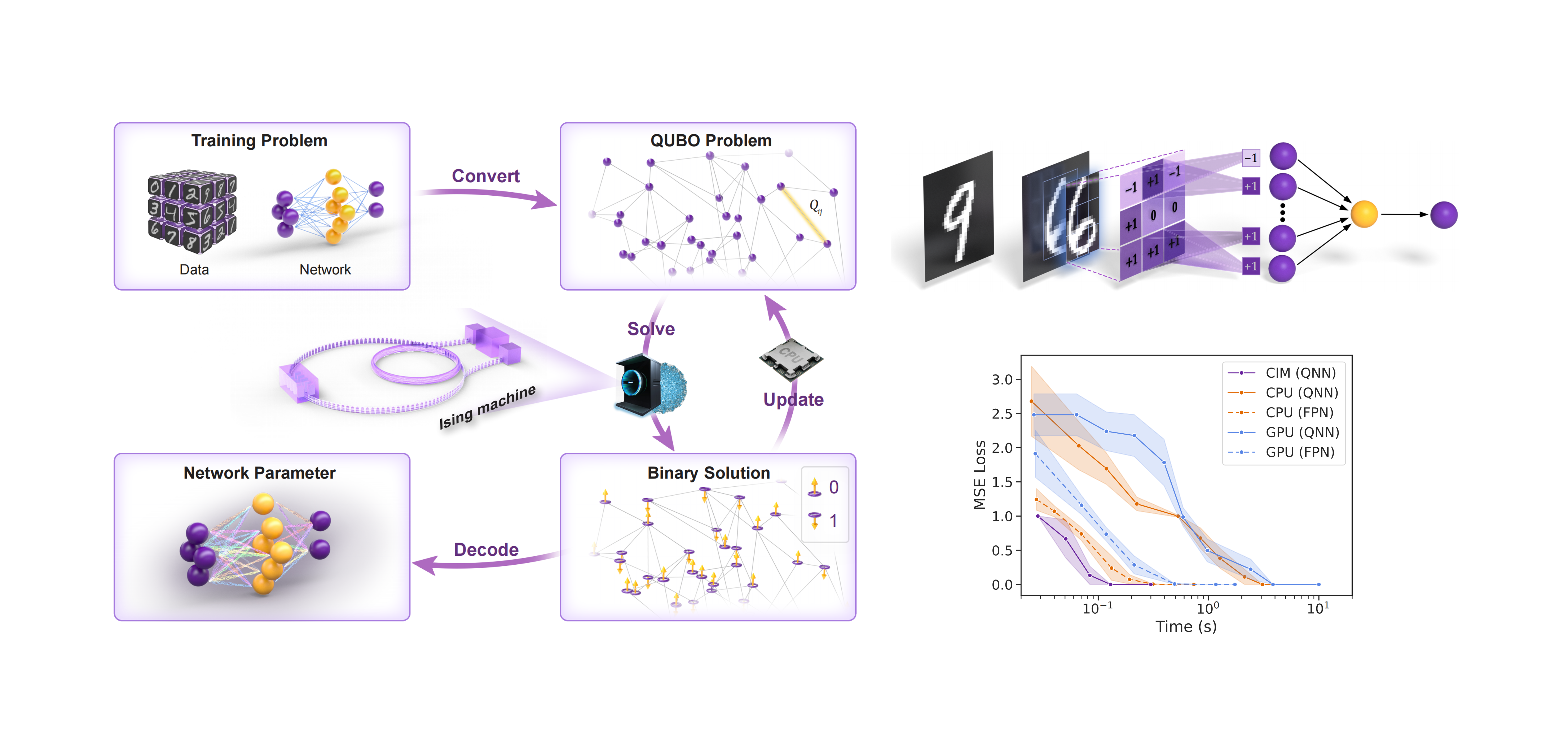

As dedicated quantum devices, Ising machines can solve large-scale binary optimization problems in milliseconds. Driven by the rapid growth of generative artificial intelligence, there is emerging interest in using Ising machines to train feedforward neural networks. However, existing methods are limited to training single-layer feedforward networks because they cannot handle complex nonlinear network topologies. This paper proposes an Ising learning algorithm that trains a quantized neural network (QNN) by combining four essential techniques: constraint representation of the network topology, binary representation of variables, augmented Lagrange iteration, and Rosenberg order reduction. First, QNN training is formulated as a quadratic constrained binary optimization (QCBO) problem by representing neuron connections and activation functions as constraints, and all quantized variables are encoded as binary bits according to a binary encoding protocol. Second, the QCBO problem is converted into a quadratic unconstrained binary optimization (QUBO) problem, which can be solved efficiently on Ising machines. This conversion combines the augmented Lagrange method, which eliminates the constraints, with Rosenberg order reduction, which reduces the resulting high-order loss function to a quadratic one. The algorithm alternates between solving the QUBO problem and updating the Lagrange multipliers until convergence. Under reasonable assumptions, the space complexity of the algorithm, measured as the number of required spins, is $\mathcal{O}(H^2L + HLN\log H)$. Finally, the algorithm is validated on the MNIST dataset using a physical coherent Ising machine. After 85 ms of training, the classification accuracy reached 87.5%. The results show that the algorithm trains QNNs more than 10 times faster than backpropagation on CPU and GPU, reducing convergence time by more than 90%. 
As the number of spins available on Ising machines grows, our algorithm has the potential to train deeper neural networks.
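The QCBO-to-QUBO pipeline described above can be illustrated on a toy problem. The sketch below is hypothetical and is not the authors' implementation; it assumes equality constraints $g(x)=0$, the standard augmented Lagrangian $f + \lambda g + \frac{\rho}{2} g^2$ with update $\lambda \leftarrow \lambda + \rho\, g(x)$, Rosenberg's penalty $M(x_i x_j - 2x_i y - 2x_j y + 3y)$ with an assumed weight $M = 2\sum|c| + 1$, and a brute-force search standing in for the Ising machine. The toy constraint $x_2 = x_0 x_1$ loosely mimics a product-type neuron constraint; all function names are illustrative.

```python
from itertools import product

# Hypothetical toy sketch (not the paper's implementation).
# A polynomial over binary variables is a dict {frozenset(var indices): coefficient}.
# Since x * x == x for x in {0, 1}, multiplying monomials is a set union.


def add(p, q, scale=1.0):
    """Return p + scale * q, dropping zero coefficients."""
    out = dict(p)
    for mono, c in q.items():
        out[mono] = out.get(mono, 0.0) + scale * c
    return {m: c for m, c in out.items() if c != 0}


def mul(p, q):
    """Return p * q in multilinear form."""
    out = {}
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            out[m1 | m2] = out.get(m1 | m2, 0.0) + c1 * c2
    return {m: c for m, c in out.items() if c != 0}


def evaluate(p, x):
    """Evaluate p at the binary assignment x (a sequence of 0/1)."""
    return sum(c * all(x[i] for i in mono) for mono, c in p.items())


def rosenberg_reduce(p, n_vars):
    """Substitute y = x_i * x_j in cubic-or-higher terms until p is quadratic.

    Each auxiliary y gets Rosenberg's penalty M*(x_i x_j - 2 x_i y - 2 x_j y + 3 y),
    which is 0 when y == x_i * x_j and at least M otherwise.
    """
    p = dict(p)
    while True:
        high = [m for m in p if len(m) > 2]
        if not high:
            return p, n_vars
        i, j = sorted(high[0])[:2]
        y, n_vars = n_vars, n_vars + 1
        # Assumed weight: M > 2 * sum|c| makes any violation y != x_i x_j suboptimal.
        big_m = 2 * sum(abs(c) for c in p.values()) + 1
        reduced = {}
        for m, c in p.items():
            if len(m) > 2 and {i, j} <= m:
                m = (m - {i, j}) | {y}
            reduced[m] = reduced.get(m, 0.0) + c
        penalty = {frozenset({i, j}): big_m, frozenset({i, y}): -2 * big_m,
                   frozenset({j, y}): -2 * big_m, frozenset({y}): 3 * big_m}
        p = add(reduced, penalty)


def solve_qubo(p, n_vars):
    """Brute-force QUBO minimizer standing in for the Ising machine (tiny n only)."""
    best_e, best_x = None, None
    for x in product((0, 1), repeat=n_vars):
        e = evaluate(p, x)
        if best_e is None or e < best_e:
            best_e, best_x = e, x
    return best_x


def augmented_lagrange(f, constraints, n, rho=1.0, iters=20):
    """Alternate QUBO solves with multiplier updates lam_k += rho * g_k(x)."""
    lam = [0.0] * len(constraints)
    x = None
    for _ in range(iters):
        aug = dict(f)
        for lam_k, g in zip(lam, constraints):
            aug = add(aug, g, lam_k)            # + lam_k * g(x)
            aug = add(aug, mul(g, g), rho / 2)  # + (rho / 2) * g(x)^2, may be high order
        qubo, n_total = rosenberg_reduce(aug, n)
        x = solve_qubo(qubo, n_total)[:n]       # drop auxiliary spins
        viol = [evaluate(g, x) for g in constraints]
        if all(abs(v) < 1e-9 for v in viol):
            break
        lam = [lam_k + rho * v for lam_k, v in zip(lam, viol)]
    return x, lam


# Toy problem: minimize -x0 - 2*x1 + 3*x2 subject to x2 == x0 * x1.
f = {frozenset({0}): -1.0, frozenset({1}): -2.0, frozenset({2}): 3.0}
g = {frozenset({0, 1}): 1.0, frozenset({2}): -1.0}
x, lam = augmented_lagrange(f, [g], n=3)
print(x, lam)  # (0, 1, 0) [1.0]
```

In this run the first QUBO solve returns the infeasible point $(1,1,0)$, the multiplier rises to 1, and the second solve lands on the constrained optimum. Squaring the quadratic constraint produces the cubic term $x_0x_1x_2$, which is exactly where the order reduction is needed. The brute-force solver is exponential and only a placeholder; on hardware, the quadratic dictionary would instead be mapped to spin couplings.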